In this project, our task was to build a computer vision system that returns the coordinates of a single box in a given image using a segmentation method.

This task is a simplified version of bin picking, which is a core problem in computer vision and robotics.
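To make the goal concrete, here is a minimal sketch of how coordinates could be read off a segmentation mask once a model has produced one. It assumes the mask is a plain 2D grid of 0/1 values; the function name `box_coordinates` and the example mask are made up for illustration and are not taken from our repository.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # rows of 0/1 values; 1 marks a pixel that belongs to the box
BBox = Tuple[int, int, int, int]
Point = Tuple[float, float]


def box_coordinates(mask: Mask) -> Optional[Tuple[BBox, Point]]:
    """Return ((x_min, y_min, x_max, y_max), (cx, cy)) for the foreground
    pixels of the mask, or None if the mask contains no foreground."""
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    bbox = (min(xs), min(ys), max(xs), max(ys))
    # The centroid is the mean position of all foreground pixels.
    centroid = (sum(xs) / len(xs), sum(ys) / len(ys))
    return bbox, centroid


# Hypothetical 4x5 mask with a 2x3 block of "box" pixels.
example_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
result = box_coordinates(example_mask)
```

In practice the same computation would more likely be done with NumPy or OpenCV on the mask array returned by the model; the pure-Python version is only meant to show the idea.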

DeepLab Model

For our first attempt, our team, including my group mate Isiri Withanawasam, was asked to study the DeepLab semantic segmentation paper and implement the model for our application.

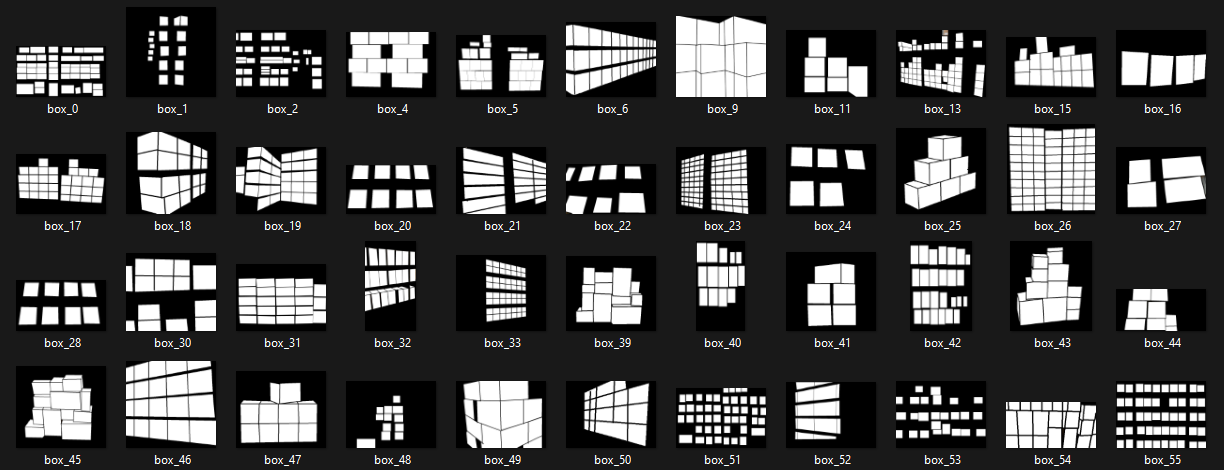

Since the DeepLab model is not trained on any segmentation dataset that contains box images, we created a dataset of about 100 annotated images.

Then the DeepLabV3 model was trained on our custom dataset using transfer learning. This is the result we got after 25 epochs.

Since we were not satisfied with the results, we followed our supervisor's advice, read the SAM paper, and moved on to using that model.

SAM Model

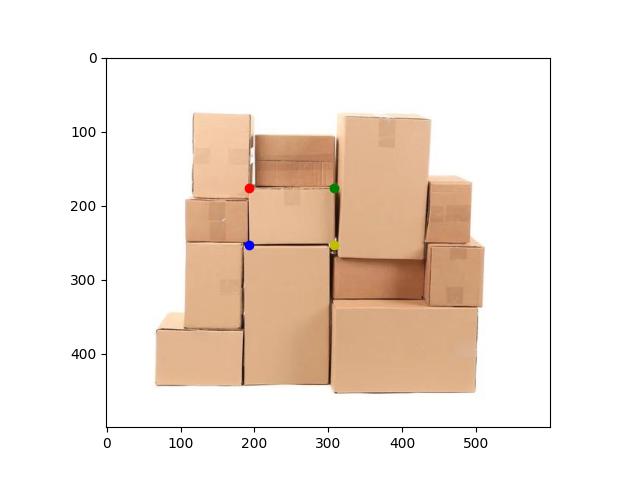

Using this model (Segment Anything by Meta AI), we got the output we were looking for. The results were obtained in Google Colab using an NVIDIA Tesla T4 GPU.

Fast SAM Model

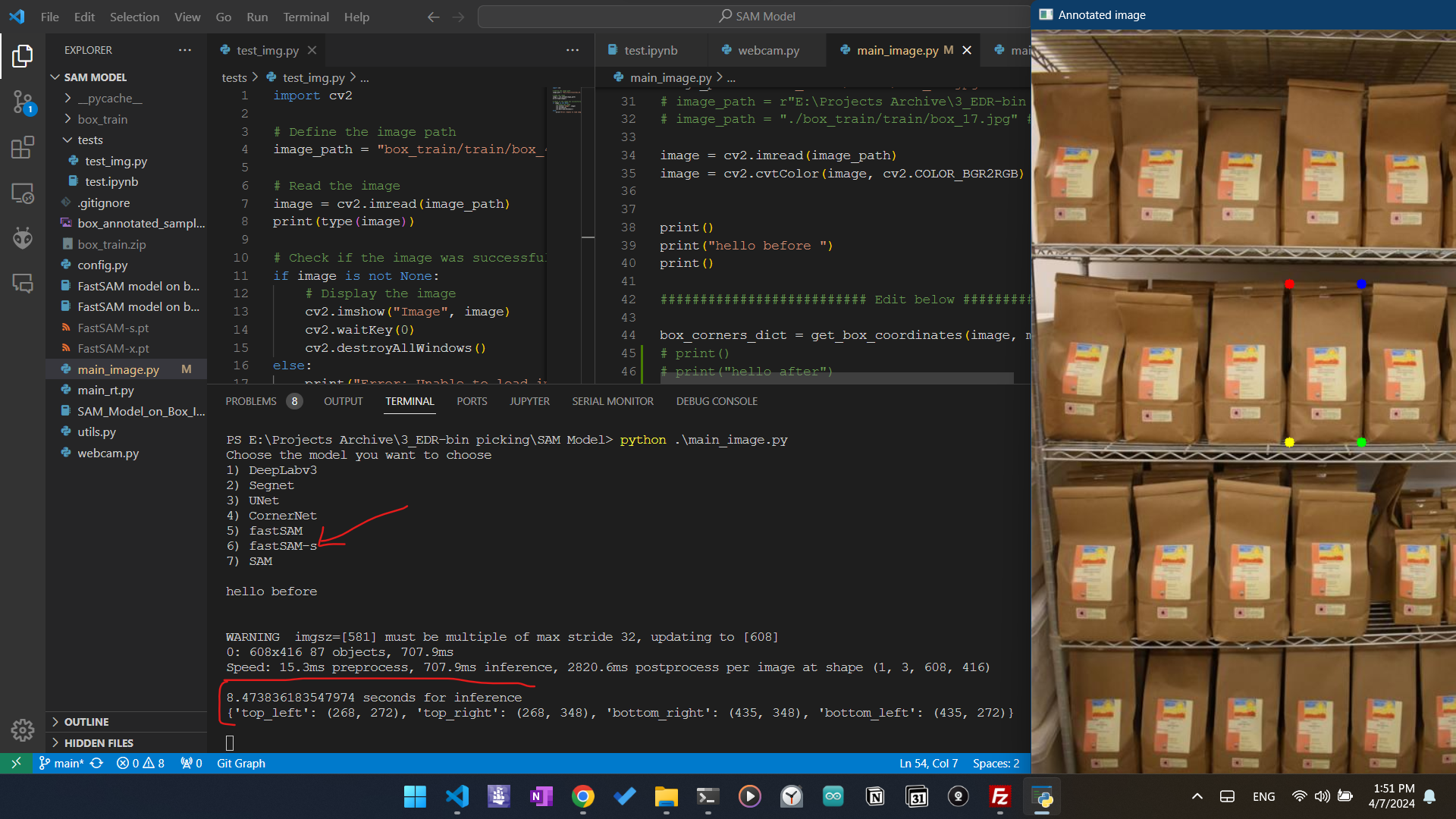

We want real-time inference, with the computation running on an edge device, so we switched to Fast SAM. With this model we could get results on the edge device using only the CPU, in less time than with the SAM model. We sacrifice some accuracy in exchange for faster inference.

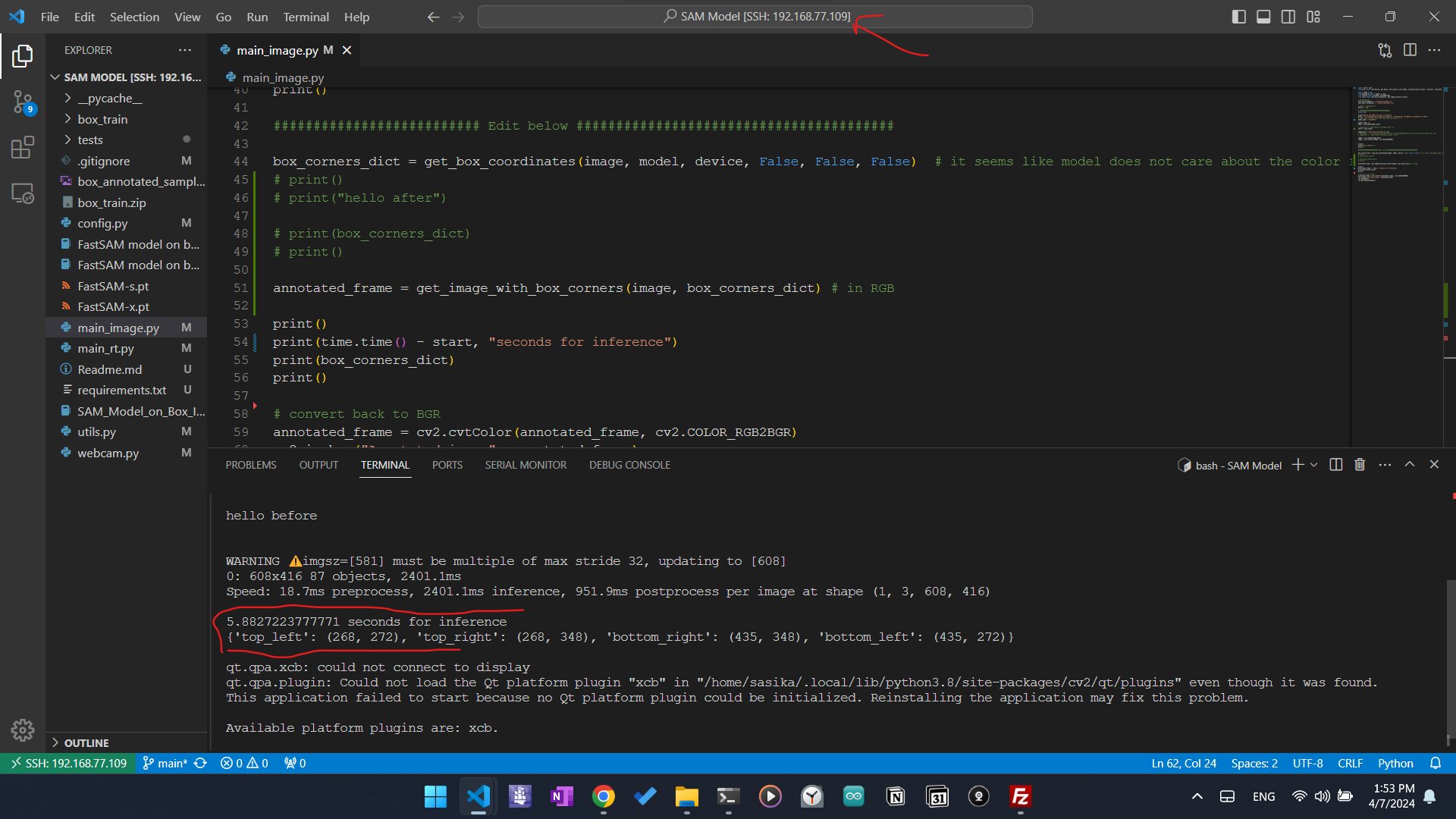

On Local Machine

On Raspberry Pi 4B

Note: The output image is not shown on the Raspberry Pi because the Raspberry Pi 4B was running Ubuntu Server with no GUI. That is the reason for the Qt error.
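One way to avoid this kind of error on a headless board is to check for a display before trying to open a window. The sketch below is an assumption about how that check could look, not what our script currently does; `has_display` is a hypothetical helper that only inspects the usual X11 and Wayland environment variables on Linux.

```python
import os
from typing import Mapping, Optional


def has_display(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if an X11 or Wayland display appears to be configured.

    `env` defaults to the process environment; passing a dict makes the
    function easy to try out without changing real environment variables.
    """
    env = os.environ if env is None else env
    return bool(env.get("DISPLAY") or env.get("WAYLAND_DISPLAY"))


# Example with explicit (made-up) environments instead of the real one.
desktop_env = {"DISPLAY": ":0"}
headless_env = {"HOME": "/home/ubuntu"}
show_on_desktop = has_display(desktop_env)
show_on_headless = has_display(headless_env)
```

If the script displays results with OpenCV's `cv2.imshow`, the headless case could fall back to writing the image to disk with `cv2.imwrite` and copying it off the board afterwards.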

Camera integration & Multithreading

To decrease the overall inference time, we use multithreading: one thread captures input images from the camera and buffers them, while another thread runs the computation to produce the output. See the latest commit in the old branch of the GitHub repository (not yet merged into the main branch).
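The two-thread arrangement described above can be sketched with Python's standard `threading` and `queue` modules. This is a simplified illustration, not the code in our repository: the camera is replaced by any iterable of frames, `infer` stands in for the segmentation model, and the names `capture_frames`, `run_inference`, and `process_stream` are made up for the example.

```python
import queue
import threading
from typing import Any, Callable, Iterable, List

_STOP = object()  # sentinel telling the worker that no more frames are coming


def capture_frames(source: Iterable[Any], buffer: "queue.Queue[Any]") -> None:
    """Producer thread: read frames from the source and buffer them."""
    for frame in source:
        buffer.put(frame)  # blocks while the buffer is full
    buffer.put(_STOP)


def run_inference(buffer: "queue.Queue[Any]",
                  infer: Callable[[Any], Any],
                  results: List[Any]) -> None:
    """Consumer thread: take buffered frames and run the model on each one."""
    while True:
        frame = buffer.get()
        if frame is _STOP:
            break
        results.append(infer(frame))


def process_stream(source: Iterable[Any],
                   infer: Callable[[Any], Any],
                   buffer_size: int = 4) -> List[Any]:
    """Run capture and inference in separate threads and collect the outputs."""
    buffer: "queue.Queue[Any]" = queue.Queue(maxsize=buffer_size)
    results: List[Any] = []
    producer = threading.Thread(target=capture_frames, args=(source, buffer))
    consumer = threading.Thread(target=run_inference, args=(buffer, infer, results))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return results


# Stand-ins: integers play the role of frames, doubling plays the role of the model.
fake_frames = range(5)
outputs = process_stream(fake_frames, infer=lambda frame: frame * 2)
```

With a single producer and a single consumer, the queue preserves frame order. This sketch blocks the capture thread when the buffer is full; a real-time camera loop might instead drop stale frames so that inference always works on the most recent image.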

Future Improvements

At the time of writing, we have successfully completed the assigned task. As part of the hardware project, we have also created a custom gripper.

After the evaluation day, we hope to go the extra mile and integrate this vision system and the custom gripper with an industrial robot arm using ROS2.

We have already checked two robot arms available in our university’s Electrical Engineering Department. Here are those robot arms.

Specifications of the above robot arms